Continuing Certification Update: OLA Scoring Provides Accurate Representation of Diplomates’ Knowledge

April 2025;18(2):11

By Brooke Houck, PhD, ABR Associate Director of Assessments Research and Strategy

Online Longitudinal Assessment (OLA) is a continuous assessment program that allows diplomates to engage in learning over the course of their five-year Continuing Certification Part 3 cycle. A passing OLA score in the fifth year of each cycle fulfills the Part 3 requirement of Continuing Certification.

Every OLA participant has a unique assessment. It is extremely unlikely for any two participants to see the same questions, in the same order, at the same time. The system selects questions randomly, using an algorithm that considers, among other things, the exam blueprint and the diplomate’s certification. Therefore, the formula below describes scoring for one specific individual and must be applied at the individual level. Each participant’s “last 200 questions” are unique to that participant: they were answered at different times than other participants’ questions and may carry different difficulty ratings.

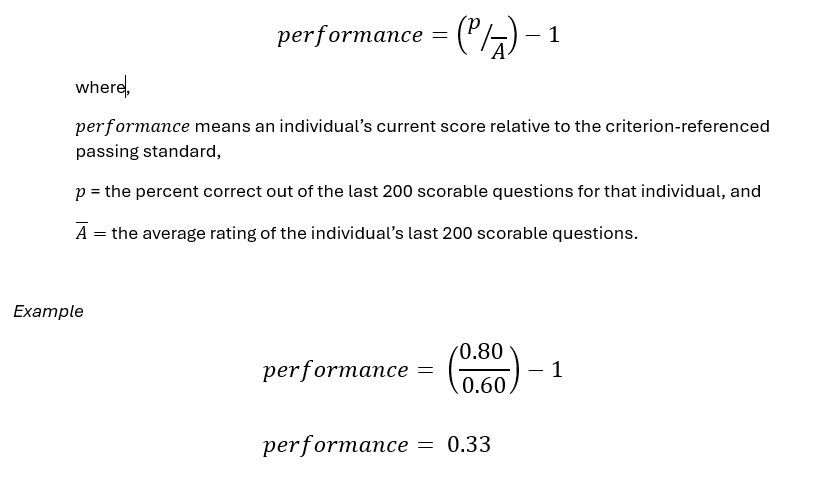

Scoring formula

In this example, the participant’s OLA performance is 0.33 above the standard. The scaled standard is zero for all participants. The participant answered 80% of their last 200 scorable questions correctly, and the average difficulty rating of those questions is 60%. Dividing 0.80 by 0.60 gives approximately 1.33, which represents raw performance relative to the individual’s criterion-referenced standard. One is then subtracted from this raw performance to scale it, resulting in a performance score of 0.33. This should be interpreted as 33% above the minimum passing standard for that individual’s last set of 200 scorable questions. Because the assessment is continuous, once 200 scorable questions have been completed, each additional scorable question pushes the oldest one out of the scorable set, so the score changes dynamically to reflect the participant’s ongoing performance.
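The arithmetic in this example can be sketched in Python as a minimal illustration, assuming each scorable response is stored as a hypothetical `(correct, rating)` pair with the rating expressed as a proportion (0.60 for 60%). The helper name `ola_performance` and the simulated response history are illustrative assumptions, not the ABR’s implementation. A `collections.deque` with `maxlen=200` models the rolling scorable set, since appending to a full deque discards its oldest item.

```python
from collections import deque

WINDOW = 200  # size of the rolling scorable set


def ola_performance(responses):
    """Hypothetical helper: (percent correct / mean rating) - 1.

    `responses` is an iterable of (correct, rating) pairs, where `rating`
    is the item's difficulty rating as a proportion (e.g., 0.60).
    A result of 0 corresponds to the scaled standard.
    """
    responses = list(responses)
    if not responses:
        raise ValueError("no scorable responses")
    n = len(responses)
    pct_correct = sum(1 for correct, _ in responses if correct) / n
    mean_rating = sum(rating for _, rating in responses) / n
    return pct_correct / mean_rating - 1


# Once it holds WINDOW items, each append discards the oldest item.
scorable = deque(maxlen=WINDOW)

# Simulated history matching the example: 160 of 200 correct (80%),
# each item rated 0.60, so the mean rating is 0.60.
for i in range(WINDOW):
    scorable.append((i < 160, 0.60))

print(round(ola_performance(scorable), 2))  # 0.33

# A 201st scorable response (here, incorrect) pushes out the oldest one,
# which was correct, leaving 159 of 200 correct.
scorable.append((False, 0.60))
print(len(scorable))  # 200
```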

Rating questions over time

Questions are continuously rated; therefore, each question is scored using the rating in effect during the week in which it was answered. This ensures that someone who correctly answers a very difficult question (one with a low difficulty rating)1 gets credit for its difficulty, even if the question later becomes easier (its difficulty rating increases).
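One way to picture week-specific scoring is to keep a dated history of each question’s rating and look up the value in effect during the week a response was submitted. The sketch below assumes a hypothetical `rating_history` mapping (question ID to a dictionary of week number and the rating that took effect that week); the data and the `rating_for_week` helper are illustrative only.

```python
# Hypothetical rating history for one question: week number -> rating that
# took effect that week. A higher rating means an easier question.
rating_history = {"q1": {1: 0.30, 20: 0.55}}


def rating_for_week(question_id, week):
    """Return the rating in effect during `week` (latest update at or before it)."""
    history = rating_history[question_id]
    eligible = [w for w in history if w <= week]
    if not eligible:
        raise ValueError(f"{question_id} had no rating in week {week}")
    return history[max(eligible)]


# A response submitted in week 5 is scored against the 0.30 rating
# (a difficult item), even though the item was later re-rated as easier.
print(rating_for_week("q1", 5))   # 0.3
print(rating_for_week("q1", 25))  # 0.55
```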

Question difficulty is determined by the average rating given by question raters in OLA. For a question to be scorable, it must be rated at least 10 times; however, the number of ratings is not capped. As the number of ratings for a question grows, its rating shifts to incorporate the new input. A question might “become easier” over time if the additional ratings substantially increase its difficulty rating. In testing, it is not uncommon for a question’s statistical properties (difficulty or discrimination) to change over time; this is known as parameter drift, and the ABR monitors it on all of its assessments.
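The scorability rule and the uncapped, growing pool of ratings can be sketched as a small class. This is a minimal illustration assuming each rater submits a single rating as a proportion and the question’s difficulty rating is the arithmetic mean of all ratings so far; the `QuestionRating` class is hypothetical, and only the 10-rating threshold comes from the text.

```python
from statistics import mean

MIN_RATINGS = 10  # a question needs at least 10 ratings to be scorable


class QuestionRating:
    """Hypothetical record of rater input for a single question."""

    def __init__(self):
        self.ratings = []  # uncapped: every new rating is kept

    def add(self, rating):
        self.ratings.append(rating)

    @property
    def scorable(self):
        return len(self.ratings) >= MIN_RATINGS

    @property
    def difficulty_rating(self):
        # Mean of all ratings so far; higher means easier.
        return mean(self.ratings)


q = QuestionRating()
for _ in range(9):
    q.add(0.50)
print(q.scorable)  # False: only 9 ratings

q.add(0.50)
print(q.scorable, q.difficulty_rating)  # True 0.5

# Ten additional, higher ratings make the question "easier" (drift).
for _ in range(10):
    q.add(0.75)
print(q.difficulty_rating)  # 0.625
```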

Using the example given previously, if we change the mean difficulty rating to 0.70 (70%), OLA performance is 0.14 (14% above the standard): 0.80/0.70 is approximately 1.14, and 1.14 - 1 = 0.14.

Raising the average difficulty rating from 0.60 to 0.70 (60% to 70%) while holding percent correct at 80% lowers the OLA performance score from 33% above the standard to 14% above it. Because a higher rating indicates an easier question, a mean rating of 0.70 means that, on average, these questions were easier than a set with a mean rating of 0.60, so the same percent correct earns less credit relative to the standard.
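A quick way to see this sensitivity is to evaluate the scoring arithmetic from the earlier example at both mean ratings. The minimal Python sketch below assumes the score is percent correct divided by mean rating, minus one, as in that example; the function name is hypothetical.

```python
def ola_performance(pct_correct, mean_rating):
    """Hypothetical helper: (percent correct / mean rating) - 1, both as proportions."""
    return pct_correct / mean_rating - 1


for mean_rating in (0.60, 0.70):
    score = ola_performance(0.80, mean_rating)
    print(f"mean rating {mean_rating:.2f}: {score:.2f} above the standard")
# mean rating 0.60: 0.33 above the standard
# mean rating 0.70: 0.14 above the standard
```

Under this formula the score is exactly zero, the scaled standard, when percent correct equals the mean rating; for a set with a mean rating of 0.70, answering 70% correctly would place a participant at the standard.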

Using last 200 scorable questions

OLA is designed to be a flexible assessment in which participants can both learn from incorrect answers and be assessed for the Part 3 component of Continuing Certification. Using only the last 200 scorable questions gives participants the opportunity to learn and improve over the course of the five-year Part 3 cycle. It also improves measurement precision, because diplomates become more familiar with the software the longer they use it. As a result, performance on the last 200 scorable questions always represents the best attempt at mitigating measurement error due to unfamiliarity with the software. Finally, using the most recent 200 scorable questions yields a performance score most closely tied to current knowledge, skills, and abilities.

1 The rating value is inversely related to difficulty (i.e., a high rating indicates an easy question).